Google Cloud Platform observability with Grepr

To enhance your Google Cloud Platform (GCP) observability, you can stream GCP logs to Grepr, process and transform them in a Grepr pipeline, and then forward the processed logs to any Grepr-supported observability platform or tool, such as Datadog, Splunk, or New Relic. The Grepr integration with GCP uses Google’s Pub/Sub to Datadog Dataflow template together with a Grepr Datadog integration, and requires only minimal configuration changes to route logs through a Grepr pipeline. To learn more about the Pub/Sub to Datadog template, see Google’s documentation.

Requirements

The integration with GCP requires a Datadog integration configured in Grepr, including a valid Datadog API key. However, when you configure a Grepr pipeline using this integration, you can use any Grepr-supported sink. For example, a pipeline that sources GCP logs with a Datadog integration can use a Splunk integration as a sink.

To integrate Grepr with GCP and Datadog, you must have:

- A Datadog API key for your account. You use the same API key in your Grepr integration and the configuration for GCP components.

- A Datadog integration configured in Grepr. See the Datadog integration documentation.

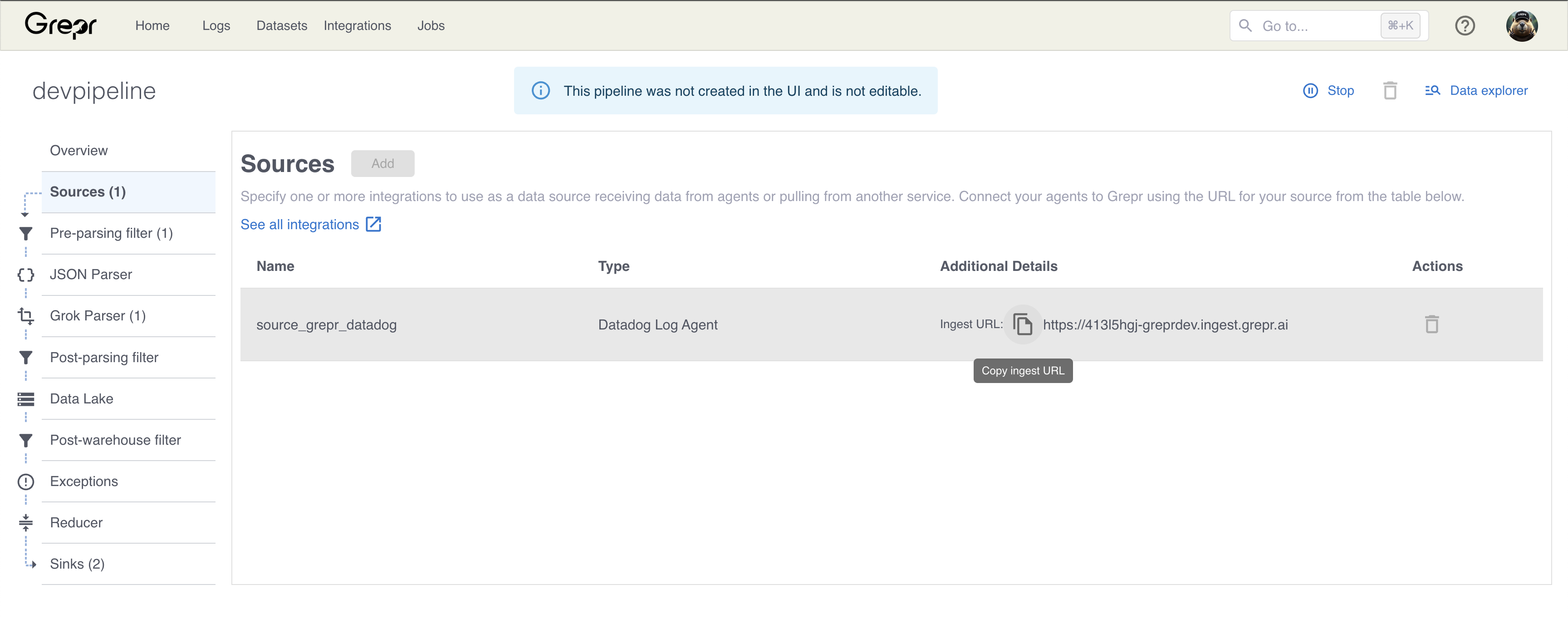

- A Grepr ingestion URL. This URL is available when you create a Grepr pipeline using the Datadog integration. To learn how to create a Grepr pipeline, see the Build your first Grepr pipeline tutorial.

- A configured GCP to Datadog infrastructure. See Stream logs from Google Cloud to Datadog. Make sure you have:

  - A Pub/Sub topic and subscription for collecting logs and sending them to Grepr.

  - A GCP Cloud Logging sink configured to route logs to your Pub/Sub topic.

  - Correct GCP IAM permissions.

Configure the GCP and Datadog integration with Grepr

To configure the integration, you modify the Google Pub/Sub to Datadog Dataflow template to send logs to Grepr instead of directly to Datadog:

- In the GCP Console, go to Dataflow > Jobs > Create Job From Template.

- Select Pub/Sub to Datadog from the available templates.

- In Required Parameters, enter the following value:

  Datadog Logs API URL: <your-grepr-ingestion-url>

- In Optional Parameters, enter the following value:

  Logs API key: <your-datadog-api-key>

The Logs API key parameter in your Dataflow job must exactly match the Datadog API key configured in your Grepr Datadog integration. Mismatched API keys will cause authentication failures and prevent log delivery.

Example Configuration

# Standard Datadog configuration:

url: https://http-intake.logs.datadoghq.com/api/v2/logs

apiKey: <datadog-api-key>

# Modified for Grepr:

url: https://<integration-id>-<org-id>.ingest.grepr.ai

apiKey: <same-datadog-api-key>

To get the Grepr ingestion URL, go to your pipeline’s source configuration page in the Grepr UI and click Copy ingest URL.
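If you launch the job from a script rather than the console, the same substitution applies. The following is a minimal Python sketch, not an official Grepr or Google tool, that assembles the template parameters and a gcloud dataflow jobs run command line. It assumes the template's parameter names are inputSubscription, url, apiKey, and outputDeadletterTopic, and that the template is published at gs://dataflow-templates-<region>/latest/Cloud_PubSub_to_Datadog. Confirm both against Google's template documentation before use. The function names and the project, subscription, topic, and job values are hypothetical placeholders.

```python
import shlex


def datadog_template_parameters(ingest_url, api_key, project, subscription, deadletter_topic):
    """Build Pub/Sub to Datadog template parameters that point at Grepr.

    Parameter names are assumed from Google's template documentation;
    verify them before relying on this sketch.
    """
    if not ingest_url.startswith("https://"):
        raise ValueError("the Grepr ingestion URL is expected to use https")
    return {
        "inputSubscription": f"projects/{project}/subscriptions/{subscription}",
        # The only change from a standard Datadog setup: the Grepr ingestion URL.
        "url": ingest_url,
        # Must match the API key configured in the Grepr Datadog integration.
        "apiKey": api_key,
        "outputDeadletterTopic": f"projects/{project}/topics/{deadletter_topic}",
    }


def gcloud_command(job_name, region, parameters):
    """Return the gcloud argument list that runs the template with these parameters."""
    pairs = ",".join(f"{key}={value}" for key, value in parameters.items())
    return [
        "gcloud", "dataflow", "jobs", "run", job_name,
        "--region", region,
        # Assumed template location; check the path for your region and version.
        "--gcs-location", f"gs://dataflow-templates-{region}/latest/Cloud_PubSub_to_Datadog",
        "--parameters", pairs,
    ]


if __name__ == "__main__":
    params = datadog_template_parameters(
        ingest_url="https://<integration-id>-<org-id>.ingest.grepr.ai",
        api_key="<same-datadog-api-key>",
        project="my-project",              # hypothetical
        subscription="gcp-logs-sub",       # hypothetical
        deadletter_topic="gcp-logs-dead",  # hypothetical
    )
    # Print the command for review instead of executing it.
    print(shlex.join(gcloud_command("gcp-logs-to-grepr", "us-central1", params)))
```

Printing the command instead of running it lets you review the URL and key before anything is deployed. In practice you would likely read the API key from a secret store rather than pass it inline.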

Verifying the integration

After deploying your Dataflow job:

- Check Dataflow Status: Verify the job is running without errors in the GCP Console.

- Monitor Grepr Pipeline: In the Grepr UI, confirm your pipeline shows active log ingestion and processing metrics.

- Verify Datadog Delivery: Check that processed logs are arriving in Datadog with tags applied by Grepr, for example processor:grepr.

Troubleshooting

Common Issues

- Authentication Errors: Verify the Datadog API key matches between the Grepr integration and the Dataflow job.

- Connection Failures: Ensure Dataflow workers can reach the Grepr ingestion endpoint.

- Missing Logs: Verify the Cloud Logging sink is properly routing logs to your Pub/Sub topic.
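To narrow down a connection failure, it can help to test whether a machine on the same network as the Dataflow workers can open a TLS connection to the ingestion host. The following Python sketch uses only the standard library. The function names are hypothetical, and the sketch assumes the ingestion endpoint listens on port 443 unless the URL specifies another port. A successful handshake shows only that the network path and certificate are fine; it does not validate the API key.

```python
import socket
import ssl
from urllib.parse import urlsplit


def endpoint_address(ingest_url):
    """Extract (host, port) from an https ingestion URL."""
    parts = urlsplit(ingest_url)
    if parts.scheme != "https" or not parts.hostname:
        raise ValueError(f"expected an https URL, got {ingest_url!r}")
    # Default to 443 when the URL carries no explicit port (an assumption).
    return parts.hostname, parts.port or 443


def can_reach(ingest_url, timeout=5.0):
    """Return True if a TCP connection and TLS handshake succeed."""
    host, port = endpoint_address(ingest_url)
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return True
    except OSError:
        # Covers DNS failures, timeouts, refused connections, and TLS errors.
        return False
```

Run can_reach with your copied ingest URL from a VM in the same VPC and subnet as the workers. A result from a laptop says little about worker egress rules.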

Monitoring

Monitor the health of the integration with:

- GCP Dataflow job metrics and logs.

- Grepr pipeline throughput and processing metrics.

- Datadog log intake monitoring.

- Pub/Sub subscription metrics for backlog monitoring.
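For the Pub/Sub backlog in particular, the following Python sketch builds Cloud Monitoring filter strings for two subscription metrics that are commonly used for this purpose: the count of undelivered messages and the age of the oldest unacknowledged message. The choice of metrics and the helper name are assumptions, not something Grepr prescribes, and the subscription ID is a placeholder. The resulting strings can be pasted into Metrics Explorer or passed to a Cloud Monitoring client.

```python
# Pub/Sub subscription metrics commonly used to watch for a growing backlog.
BACKLOG_METRICS = (
    "pubsub.googleapis.com/subscription/num_undelivered_messages",
    "pubsub.googleapis.com/subscription/oldest_unacked_message_age",
)


def backlog_filter(metric_type, subscription_id):
    """Build a Cloud Monitoring filter for one metric on one subscription."""
    return (
        f'metric.type = "{metric_type}" AND '
        f'resource.type = "pubsub_subscription" AND '
        f'resource.labels.subscription_id = "{subscription_id}"'
    )


def backlog_filters(subscription_id):
    """Return one filter per backlog metric for the given subscription."""
    return [backlog_filter(metric, subscription_id) for metric in BACKLOG_METRICS]
```

A steadily rising undelivered count or oldest-message age suggests that the Dataflow job is not keeping up or cannot deliver to the ingestion endpoint.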