Optimizing logs with Grepr’s intelligent aggregation

Grepr’s Log Reducer intelligently identifies patterns in high-volume log streams and aggregates similar messages, providing significant volume reduction while preserving critical information. This approach maintains observability effectiveness while dramatically reducing storage and processing costs.

How log reduction works

The Log Reducer operates through a sophisticated multi-stage process that transforms high-volume log streams into manageable, information-rich summaries:

Core process

- Masking: Replaces dynamic values (e.g., timestamps, IDs, IP addresses) with specific tokens to help group similar messages into consistent patterns.

- Tokenizing: Breaks log messages into semantic tokens based on configurable separators, creating a structured representation that’s optimized for grouping.

- Clustering: Uses similarity metrics to group messages into patterns. The configurable similarity threshold determines how closely messages must match to be considered part of the same pattern. If a pattern cannot be identified, the message passes through unmodified. Reasons a message may not be reduced include:

  - The clustering process is unable to find a pattern that matches the message.

  - The log message field is empty or null.

  - The message matches a configured exception rule that prevents it from being reduced.

- Sampling: Once a pattern reaches the deduplication threshold, Grepr can either temporarily stop sending messages for that pattern (default behavior) or apply intelligent sampling. See Deduplication threshold and logarithmic sampling for configuration options.

- Summarizing: At the end of each time window, Grepr generates concise summaries that include aggregated messages that matched an identified pattern. Summary messages include:

  - grepr.patternId: Unique identifier for the pattern, making it easy to find related messages.

  - grepr.rawLogsUrl: Direct link to view all raw messages from the Grepr data lake for this pattern in the Grepr UI.

  - grepr.repeatCount: Count of aggregated messages, useful for metrics and rewriting queries.

  - grepr.receivedTimestamp: Timestamp in milliseconds since the Unix epoch of when the cluster was emitted by Grepr.

  - grepr.firstTimeTimestamp: Timestamp in milliseconds since the Unix epoch of the first message that was part of this summary.

  - grepr.lastTimeTimestamp: Timestamp in milliseconds since the Unix epoch of the last message that was part of this summary.

After processing, the resulting records include the grepr.messagetype tag. The possible values for this tag are:

- sample: Indicates that the log message is a sample of the original log messages that matched a specific pattern. Sample messages are sent through unmodified until the deduplication threshold is reached for that pattern.

- summary: Indicates that the log message is a summary of multiple source log messages that matched a specific pattern. Summary messages are generated at the end of each aggregation window for patterns that have surpassed the deduplication threshold.

- passthrough: Indicates that the log message did not match any existing patterns and was sent through unmodified.

- exception: Indicates that the log message matched an exception rule and was sent through unmodified.
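The masking, tokenizing, and clustering steps can be illustrated with a small Python sketch. This is not Grepr's implementation: the regular expressions, the mask tokens (`<TS>`, `<IP>`, `<UUID>`, `<NUM>`), the separator set, and the position-by-position similarity score are all assumptions chosen to show how masked, tokenized messages can be compared against a threshold.

```python
import re

# Hypothetical masking rules: (regex, replacement token). Order matters,
# so the more specific rules run before the generic number rule.
MASK_RULES = [
    (re.compile(r"\b\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:\.\d+)?Z?"), "<TS>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    (re.compile(r"\b[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\b"), "<UUID>"),
    (re.compile(r"\b\d+\b"), "<NUM>"),
]


def mask(message):
    """Replace dynamic values with fixed tokens."""
    for pattern, token in MASK_RULES:
        message = pattern.sub(token, message)
    return message


def tokenize(message, separators=" \t,;=:"):
    """Split a message on a configurable set of separator characters."""
    table = str.maketrans({ch: " " for ch in separators})
    return message.translate(table).split()


def similarity(tokens_a, tokens_b):
    """Percentage (0-100) of positions that hold the same token."""
    if not tokens_a or len(tokens_a) != len(tokens_b):
        return 0.0
    same = sum(a == b for a, b in zip(tokens_a, tokens_b))
    return 100.0 * same / len(tokens_a)


def same_pattern(message_a, message_b, threshold=80.0):
    """True when two messages are similar enough to share a pattern."""
    tokens_a = tokenize(mask(message_a))
    tokens_b = tokenize(mask(message_b))
    return similarity(tokens_a, tokens_b) >= threshold
```

With these rules, `"... user 1234 logged in from 10.0.0.1"` and `"... user 98 logged in from 10.0.0.27"` mask to the same string, so they match even at a threshold of 100, which mirrors the "exact matches except for masked tokens" behavior described for the similarity threshold.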

Key configuration parameters

You can fine-tune the Log Reducer’s behavior through several key configuration parameters:

- Aggregation time window: Controls how frequently Grepr processes and summarizes log patterns. The default 2-minute window provides a good balance between real-time visibility and reduction efficiency. During each window, Grepr passes samples of each pattern until the deduplication threshold is reached, then begins aggregation.

- Exception rules: Rules that let you control exactly which logs should bypass reduction. Exceptions can be defined using queries, pattern matching, or even triggered dynamically based on alerts or user actions.

- Similarity threshold: Determines how closely messages must match to be considered part of the same pattern. Lower values increase aggregation but may group somewhat different messages together. Higher values create more precise patterns but reduce aggregation efficiency. The default is optimized for most environments, while setting to 100 requires exact matches (except for masked tokens).

- Deduplication threshold and sampling strategy: Controls when Grepr begins aggregating messages for a pattern and how it handles high-volume patterns. These settings balance visibility of raw logs against reduction efficiency.

- Attribute aggregation: Configures how associated log attributes are combined when messages are grouped, allowing control over data fidelity and aggregation behavior for attribute key-value pairs.

The UI allows you to configure multiple aspects of the log reduction in different places. Sometimes, these aspects cross multiple operators. If you use the API to configure a pipeline, you may need to configure multiple operators to achieve the same effect. See the LogReducer API schema for more details.

Selective reduction with exceptions

One of Grepr’s most powerful features is its ability to selectively control which logs are reduced. Using exceptions, you can ensure that critical logs always pass through unmodified while still achieving significant volume reduction for routine logs.

Exceptions let you specify precisely which messages or patterns should bypass the reducer or receive special handling. This section covers the different types of exceptions and how to implement them for your specific use cases.

Skip aggregating specific patterns

You can define specific patterns that you don’t want to aggregate by using a query. Any messages matching those queries will skip the reducer completely.

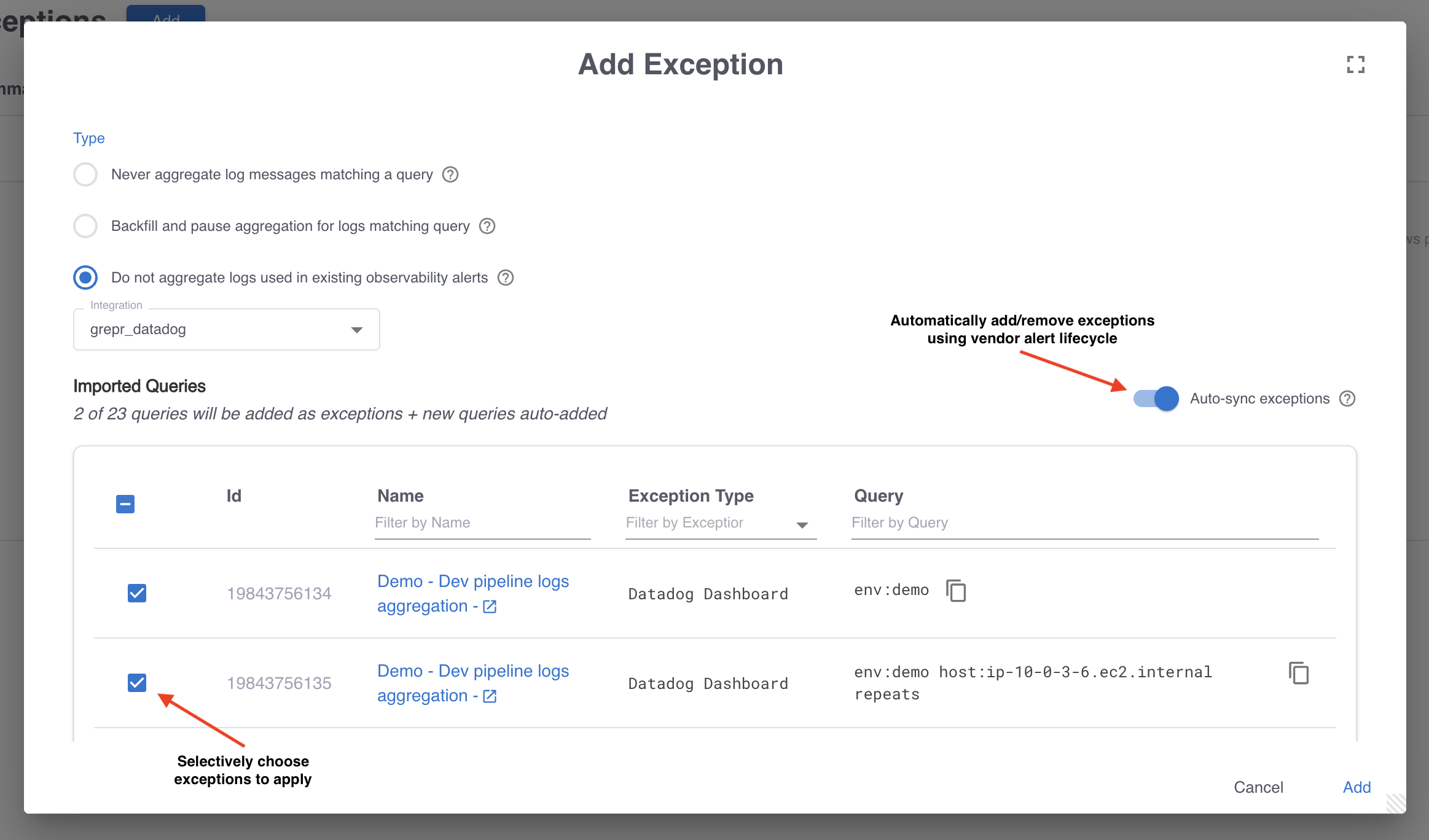

Skip aggregating patterns used in alerts or dashboards

The Log Reducer can be linked to one or more vendor integrations that support exception parsing. These are usually log queries that power existing dashboards or alerts. If enabled, the Log Reducer will automatically avoid aggregating all messages that match parsed exceptions. You can choose from the list of parsed exceptions to apply. You can also navigate to the source of the alert that Grepr used to create the exception query by clicking the URL embedded in the exception name in the list.

Additionally, you can enable Auto-sync exceptions which automatically adds and removes exceptions as you add and remove alerts or dashboards in your vendor.

If you decide to change your alert or dashboard queries to use summarized messages (see Rewrite queries), make sure you add processor:grepr to the new queries so that they do not match logs that did not go through Grepr. This tag is automatically added to all log messages that Grepr sends to external vendors and will never match a raw message. It can tell Grepr that a query for an alert or dashboard has already been rewritten.

Group by specific tags or attributes

By default, the Log Reducer does not group messages with different service tags together. You can configure Grepr to extend the set of tags or attributes that Grepr should not group together via the Grepr UI or API.

Skip aggregating specific tokens

In some cases, you may want to avoid aggregating specific tokens within a message. For example, you might want the URL and the HTTP status code for Apache HTTP logs to always be present in summary messages rather than having them aggregated away as masked tokens. This is a two-step process:

- Parse the log message using the Grok Parser so the tokens that you don’t want to aggregate end up in specific tags / attributes.

- Extend the set of tags / attributes Grepr should group messages by to include the new tags / attributes.

Full logs for traces

Grepr can automatically ensure you have full logs for a sample set of traces. In the Grepr UI, this is configured as part of the Log Reducer’s configuration. However, in the API, it’s a separate operation called the Trace Sampler. Use this when you’d like to ensure a full set of logs for some sample of “traces”. A trace here doesn’t need to mean explicitly a trace in the Application Performance Monitoring (APM) sense. Anything that groups logs together could be considered a trace. For example, a trace ID could be a request ID, a user ID, or a session ID.

This works by continuously sampling these IDs at a requested sample ratio. When a trace ID is sampled, Grepr will pass through all log messages that match that trace ID for 20 seconds.

Grepr applies the trace samplers in the order specified. Once a message matches a trace sampler, it is not passed to any subsequent trace samplers.

Configuration consists of:

- Selector filter: A query that selects the messages that should be passed through the trace sampling operation. Messages that match this query but don’t have a trace ID are not sampled.

- Trace ID path: Path to the trace ID in the event which could be an attribute path or a tag.

- Sample percentage: Percent of traces to backfill. If you always want all logs for any trace IDs (meaning you’d like this value to be 100%), you should instead add the trace IDs as a query exception.
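The sampling behavior described above can be sketched in Python as follows. This is an illustration under stated assumptions, not Grepr's implementation: the class name `TraceSamplerSketch`, the `should_pass` method, and the injectable `rng` are hypothetical, and the sketch assumes that a sampling decision (sampled or not) is remembered per trace ID for the length of the pass-through window.

```python
import random


class TraceSamplerSketch:
    """Passes all logs for a sampled subset of trace IDs (illustrative only)."""

    def __init__(self, sample_percentage, window_seconds=20.0, rng=None):
        if not 0.0 <= sample_percentage <= 100.0:
            raise ValueError("sample_percentage must be between 0 and 100")
        self.sample_ratio = sample_percentage / 100.0
        self.window_seconds = window_seconds
        self._rng = rng if rng is not None else random.Random()
        # trace_id -> (sampled?, time at which the decision expires)
        self._decisions = {}

    def should_pass(self, trace_id, now):
        """Return True when a message with this trace ID bypasses reduction."""
        if trace_id is None:
            # Messages without a trace ID are never sampled.
            return False
        decision = self._decisions.get(trace_id)
        if decision is None or now >= decision[1]:
            # No live decision for this ID: draw a new one for the next window.
            sampled = self._rng.random() < self.sample_ratio
            decision = (sampled, now + self.window_seconds)
            self._decisions[trace_id] = decision
        return decision[0]
```

For example, `TraceSamplerSketch(10).should_pass("req-42", now=0.0)` returns True for roughly one in ten trace IDs, and keeps returning True for that ID until 20 seconds have passed.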

Dynamic query-based exceptions

What if you want to ensure you have a full set of logs before and after some specific error messages? You can do this by configuring a dynamic exception. When Grepr matches a specific query that you configure, Grepr will stop aggregating messages that match the context of the matched message and will backfill messages that match that context.

For example, you can configure Grepr to stop aggregating messages from any host for 1 hour and backfill messages from that host from an hour ago, if that host emits an “ERROR”.

There are four parts to this configuration:

- Trigger query: This is the query that should trigger the exception. For example, status:error.

- Context: What tags or attributes from the matched message should be used as context to select messages that should be excepted? For example, host or @request.url.

- Exception duration: How long should Grepr stop aggregating messages from the context?

- Backfill duration: How long ago should Grepr backfill matching messages from?

The Grepr UI implements this exception configuration as part of the Log Reducer configuration settings. When using the API, this functionality is managed through the Rule Engine endpoints.
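To make the four parts concrete, here is a minimal Python sketch of how a trigger query, a context, an exception duration, and a backfill duration could fit together. The class `DynamicExceptionSketch`, its method names, and the use of a plain Python predicate in place of a Grepr query are all hypothetical; this is not the Rule Engine API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class DynamicExceptionSketch:
    trigger: Callable[[dict], bool]   # stands in for the trigger query
    context_keys: Tuple[str, ...]     # tags/attributes used as context
    exception_seconds: float          # how long to stop aggregating
    backfill_seconds: float           # how far back to backfill
    _active: Dict[tuple, float] = field(default_factory=dict)

    def _context(self, event):
        return tuple(event.get(key) for key in self.context_keys)

    def observe(self, event, now):
        """If the event triggers the rule, activate the exception for its
        context and return the (start, end) time range to backfill."""
        if not self.trigger(event):
            return None
        self._active[self._context(event)] = now + self.exception_seconds
        return (now - self.backfill_seconds, now)

    def bypasses_reducer(self, event, now):
        """True while an exception is active for this event's context."""
        expiry = self._active.get(self._context(event))
        return expiry is not None and now < expiry
```

With `trigger=lambda e: e.get("status") == "error"`, `context_keys=("host",)`, and both durations set to 3600 seconds, an error from one host stops aggregation for that host for an hour and yields a one-hour backfill range, while other hosts continue to be reduced.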

Dynamic callback-based exceptions

These are also called External Triggers.

You can configure Grepr to dynamically stop aggregating messages that match some context for some duration and backfill messages that match that context based on a callback from an external system. For example, you can configure the callback to Grepr when there’s an alert in your monitoring system or when a user creates a support ticket in your ticket management system.

The Grepr UI walks you step by step through the process of creating an API key that can be used to trigger these exceptions and provides a cURL command that can be used. You will need to follow your vendor’s documentation for implementing the API callback that would execute similar to the cURL command. For help, contact support@grepr.ai. This capability is available in the Grepr UI and API.

Deduplication threshold and logarithmic sampling

Every aggregation window, Grepr will start by passing through a configurable number of sample messages unaggregated for each pattern. Once that threshold is crossed for a specific pattern, Grepr by default stops sending messages for that pattern until the end of the aggregation window. Then the lifecycle repeats. This ensures that a base minimum number of raw messages will always pass through unaggregated. Low frequency messages that usually contain important troubleshooting information will pass through unaggregated.

While this behavior maximizes the reduction, log spikes for any messages beyond the dedup threshold disappear. Features in the external log aggregator that depend on counts of messages by pattern (such as Datadog’s “group by pattern” capability) would no longer work well.

Instead, Grepr allows you to sample messages beyond the dedup threshold. Grepr implements “Logarithmic Sampling” that allows noisier patterns to be sampled more heavily than less noisy patterns within the aggregation window. To enable this capability, you configure the logarithm base for the sampler. For example, if the base is set to 2 and the dedup threshold is set to 4, Grepr sends one additional sample message once the number of messages hits 32 within the aggregation window, another at 64, another at 128, and so on. The first additional sample arrives at 32 rather than 16 because the 4 messages already sent before the dedup threshold was hit account for the samples up to 2^4 = 16, so the next one is due at 2^5 = 32.

This capability is available through both the UI and API.
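The schedule in the base-2 example can be reproduced with a short Python sketch. It encodes one reading of that example, namely that after `dedup_threshold` samples have been sent, the next sample is sent when the message count reaches `base ** (dedup_threshold + 1)`, then at each following power. The function name and parameters are hypothetical, and the actual sampler may differ in detail.

```python
def sampled_message_counts(dedup_threshold, base, max_count):
    """Return the message counts (1-based, within one aggregation window)
    at which a raw message is sent, up to max_count."""
    if base < 2:
        raise ValueError("base must be an integer of at least 2")
    if dedup_threshold < 0:
        raise ValueError("dedup_threshold must not be negative")
    # Every message up to the dedup threshold passes through unaggregated.
    counts = list(range(1, min(dedup_threshold, max_count) + 1))
    # After that, one extra sample per power of the base.
    exponent = dedup_threshold + 1
    while base ** exponent <= max_count:
        counts.append(base ** exponent)
        exponent += 1
    return counts
```

Calling `sampled_message_counts(4, 2, 200)` gives the counts 1 through 4 followed by 32, 64, and 128, which matches the example: a pattern that produces 200 messages in a window sends 7 raw messages instead of 4.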

Rewrite queries

When you first deploy Grepr’s log reduction, you will likely add exceptions for all existing alerts and dashboards to minimize any disruptions to existing workflows. However, you might find that some of these alerts and dashboards are powered by counts of heavy queries. As an example, you might have a dashboard that’s powered by count of HTTP requests with status code 200. Since this is the normal status code, most HTTP log messages will have status 200. If you add an exception for this dashboard, then your reduction will not be optimal.

To work around this issue, you can rewrite the query that powers that dashboard or alert to use the grepr.repeatCount attribute. This attribute exists on both summary messages and sample messages, making it possible to create metrics from it. See your vendor’s documentation for specifics on creating a metric from a log attribute.
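As a rough illustration of why this works, the Python sketch below recovers an approximate message count by summing grepr.repeatCount instead of counting records. It assumes each record is a flat dictionary keyed by attribute name, that a sample message carries a repeat count of 1, and that a summary's repeat count covers only the messages it aggregated. These are assumptions for the example; check the actual record layout in your vendor before relying on them.

```python
def estimated_original_count(records):
    """Sum grepr.repeatCount across records delivered by Grepr.

    Records without the attribute are counted once (an assumption).
    """
    total = 0
    for record in records:
        total += int(record.get("grepr.repeatCount", 1))
    return total


# Hypothetical window: two samples passed through, then one summary
# standing in for 498 aggregated messages.
delivered = [
    {"status": 200, "grepr.repeatCount": 1},
    {"status": 200, "grepr.repeatCount": 1},
    {"status": 200, "grepr.repeatCount": 498},
]
total_requests = estimated_original_count(delivered)
```

Counting records here would report 3 requests, while summing the attribute reports 500, which is why a dashboard query rewritten to sum grepr.repeatCount can stay accurate without an exception.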

Attribute aggregation

Attribute aggregation empowers you to control how the key-value pairs within the attributes of individual log events are combined when those events are aggregated into a single log pattern. This is crucial because while the message field might be similar enough for aggregation, the associated attributes can vary. Grepr provides two configuration options:

- Specific attribute path strategies: You can optionally define one or more merge strategies for specific attribute paths. An attribute path uses a dotted string (e.g., a.b.c) to target a nested key. For each specified path, you can select from multiple merge strategies: Exact Match, Preserve All, Sample, Sum, Min, Max, or Average. You can also generate multiple output attributes from a single attribute path, where the output attributes have different aggregations, by applying multiple strategies to the single attribute path.

  - For Preserve All and Sample strategies, you must provide a limit (an integer greater than 0) and a unique flag:

    - The limit determines the maximum number of values to collect for a given attribute.

    - The unique flag, when set to true, ensures that only distinct values are collected within the limit, while false allows duplicate values up to the specified limit.

  - Numeric aggregation strategies (Sum, Min, Max, Average) require no additional configuration and work automatically with numeric values and collections of numeric values.

  You do not need to supply a path to a leaf value. You can provide a path to an attribute whose value is itself a map. When a path points to a map-valued attribute, the merge strategy applies to the entire map object. If such a path is specified, then child attributes (e.g., a.b.c if a.b is a map) cannot also have their own specific merge strategies defined, and they will not be handled by the default merge strategy.

- Default attribute merge strategy: For any attribute keys not explicitly configured with a specific path strategy, the default merge strategy will be applied. This default strategy also allows you to choose between Exact Match, Preserve All, Sample, Sum, Min, Max, or Average, with the same configuration options. This default strategy is applied only to leaf-valued attributes.

Grepr supports seven attribute merging strategies, each designed to handle varying attribute values during the aggregation process:

Exact match strategy

The Exact Match strategy ensures that for a given attribute key, its value is only retained if it is identical across all aggregated log events. If the values for the same key differ between events, the value is replaced with a wildcard string (*). This strategy is ideal when you require strict equality for an attribute’s value within an aggregated pattern.

When applied to a map-valued attribute, the Exact Match strategy performs a deep equality check on the entire map object. If the maps are not deeply equal across all aggregated events for that path, the entire map is replaced with a wildcard string (*).
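The rule described above can be sketched in a few lines of Python. The function names are hypothetical, and the sketch only handles top-level attribute keys rather than dotted paths. It relies on Python's `==`, which compares dictionaries deeply, to stand in for the deep equality check on map values.

```python
WILDCARD = "*"


def exact_match_merge(values):
    """Return the shared value, or "*" when any value differs."""
    values = list(values)
    if not values:
        raise ValueError("at least one value is required")
    first = values[0]
    return first if all(value == first for value in values[1:]) else WILDCARD


def merge_events_exact(events, paths):
    """Apply the Exact Match rule to each top-level key in `paths`.

    A key missing from an event is treated as None (an assumption).
    """
    return {
        path: exact_match_merge(event.get(path) for event in events)
        for path in paths
    }
```

Because equality is checked on the whole value, two maps that differ in a single nested field collapse to the wildcard as a unit, rather than field by field.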

Example (Leaf Value):

Consider two log events being aggregated, with their respective attributes maps:

// Event 1 Attributes

{

"request_id": "abc-123",

"status": "success",

"user": "alice"

}

// Event 2 Attributes

{

"request_id": "abc-123",

"status": "error",

"user": "alice"

}

Applying the Exact Match strategy:

// Aggregated Attributes

{

"request_id": "abc-123",

"status": "*",

"user": "alice"

}

Example (Map Value - paths: event_type and details):

Consider two log events:

// Event 1 Attributes

{

"event_type": "login",

"details": {

"ip": "192.168.1.1",

"country": "USA"

}

}

// Event 2 Attributes

{

"event_type": "login",

"details": {

"ip": "192.168.1.2",

"country": "USA"

}

}

Applying the Exact Match strategy for the event_type and details paths:

// Aggregated Attributes

{

"event_type": "login",

"details": "*" // The maps for 'details' are not deeply equal

}

Preserve all strategy

The Preserve All strategy aims to retain all values for a given attribute key up to a configured limit. You can also specify whether the collected values should be unique (unique flag set to true, resulting in a set-like collection) or if all instances should be preserved (unique flag set to false, resulting in a list-like collection). This strategy is useful when you need to see the full spectrum of values for an attribute within an aggregated pattern, without truncation.

When applied to a map-valued attribute, the entire map object is treated as a single “scalar” value for the purpose of the limit. If unique is set to true, maps are deeply compared for uniqueness.

The limit also defines an upper bound on the maximum number of events that can be clustered together for a given pattern during a time window. When the limit is reached, the current cluster is emitted as a summary message, and a new cluster begins for that pattern. This ensures that attribute collections don’t grow unbounded while still preserving important data.
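The interaction between the limit, the unique flag, and cluster emission can be sketched in Python. This follows one reading of the description above: values are collected in arrival order, duplicates are absorbed when unique is true, and the current cluster is emitted when one more value would exceed the limit. The function name is hypothetical, and the sketch tracks a single attribute, whereas a real cluster spans whole events.

```python
def preserve_all(values, limit, unique):
    """Group values into per-cluster collections of at most `limit` items.

    Returns a list of collections, one per emitted cluster.
    """
    if limit <= 0:
        raise ValueError("limit must be greater than 0")
    clusters = []
    current = []
    for value in values:
        # `in` uses ==, so maps (dicts) are compared deeply.
        if unique and value in current:
            continue
        if len(current) == limit:
            # The collection is full: emit it and start a new cluster.
            clusters.append(current)
            current = []
        current.append(value)
    if current:
        clusters.append(current)
    return clusters
```

With `unique=False`, every event contributes a value, so `limit` caps the number of events per cluster. With `unique=True`, repeated values do not consume the limit, so a cluster can absorb more events than `limit` as long as their values repeat.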

Example (Leaf Value - Limit: 3, Unique: true):

Consider three log events being aggregated:

// Event 1 Attributes

{

"service_tag": "web-01",

"response_time_ms": 100

}

// Event 2 Attributes

{

"service_tag": "web-02",

"response_time_ms": 120

}

// Event 3 Attributes

{

"service_tag": "web-01",

"response_time_ms": 90

}

Applying the Preserve All strategy with limit=3 and unique=true for service_tag and response_time_ms:

// Aggregated Attributes

{

"service_tag": ["web-01", "web-02"],

"response_time_ms": [100, 120, 90]

}

Example (Map Value - paths: action and metadata, Limit: 2, Unique: true):

Consider three log events:

// Event 1 Attributes

{

"action": "view",

"metadata": {

"page": "/home",

"user_type": "guest"

}

}

// Event 2 Attributes

{

"action": "view",

"metadata": {

"page": "/products",

"user_type": "guest"

}

}

// Event 3 Attributes

{

"action": "view",

"metadata": {

"page": "/home",

"user_type": "guest"

}

}

Applying the Preserve All strategy for the action and metadata paths with limit=2 and unique=true:

// Aggregated Attributes

{

"action": "view",

"metadata": [

{

"page": "/home",

"user_type": "guest"

},

{

"page": "/products",

"user_type": "guest"

}

]

}

Sample strategy

The Sample strategy collects a sample of attribute values up to a specified limit. Similar to Preserve All, you can control whether the samples collected are unique (unique flag set to true) or if duplicates are allowed (unique flag set to false). After the limit is reached, any additional values for that attribute key are truncated. This strategy is beneficial for high-volume attributes where a representative sample of values is sufficient for understanding, without incurring the overhead of storing all unique values.

When applied to a map-valued attribute, the entire map object is treated as a single “scalar” for the limit and unique considerations, similar to Preserve All but with sampling logic applied.
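A minimal Python sketch of this behavior follows. It assumes the sample is simply the first values to arrive, up to the limit, with everything after that dropped. The text does not state how values are chosen, so treat the selection rule and the function name as assumptions.

```python
def sample_values(values, limit, unique):
    """Keep up to `limit` values in arrival order; drop the rest."""
    if limit <= 0:
        raise ValueError("limit must be greater than 0")
    kept = []
    for value in values:
        if len(kept) == limit:
            # Limit reached: remaining values are truncated.
            break
        # `in` uses ==, so maps (dicts) are compared deeply.
        if unique and value in kept:
            continue
        kept.append(value)
    return kept
```

Unlike Preserve All, reaching the limit here does not start a new cluster. Later values are discarded, which keeps the summary small for high-cardinality attributes.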

Example (Leaf Value - Limit: 2, Unique: false):

Consider four log events being aggregated:

// Event 1 Attributes

{

"user_agent": "Chrome",

"session_id": "s1"

}

// Event 2 Attributes

{

"user_agent": "Firefox",

"session_id": "s2"

}

// Event 3 Attributes

{

"user_agent": "Chrome",

"session_id": "s3"

}

// Event 4 Attributes

{

"user_agent": "Safari",

"session_id": "s4"

}

Applying the Sample strategy with limit=2 and unique=false for the user_agent and session_id paths:

// Aggregated Attributes (user_agent will contain a sample of up to 2 values)

{

"user_agent": ["Chrome", "Firefox"],

"session_id": ["s1", "s2"]

}

Example (Map Value - paths: endpoint and request_details, Limit: 1, Unique: true):

Consider three log events:

// Event 1 Attributes

{

"endpoint": "/api/users",

"request_details": {

"method": "GET",

"params": {}

}

}

// Event 2 Attributes

{

"endpoint": "/api/users",

"request_details": {

"method": "POST",

"params": {"id": 123}

}

}

// Event 3 Attributes

{

"endpoint": "/api/users",

"request_details": {

"method": "GET",

"params": {"id": 1}

}

}

Applying the Sample strategy for the endpoint and request_details paths with limit=1 and unique=true:

// Aggregated Attributes

{

"endpoint": "/api/users",

"request_details": [

{

"method": "GET",

"params": {}

}

]

}

Sum strategy

The Sum strategy computes the sum of numeric attribute values across all aggregated log events. Non-numeric values are ignored, and the result is stored as a scalar double value. This strategy is ideal for calculating totals such as bytes transferred, request counts, or accumulated durations.

This strategy supports both scalar and collection inputs. Scalar numbers are summed directly, while collections are scanned for all numeric values and summed together before being added to the scalar total.
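The handling of scalars, collections, and non-numeric values can be sketched in Python. The helper names are hypothetical, collections are only scanned one level deep, and returning 0.0 when no numeric value is present is an assumption, since the text does not cover that case.

```python
from numbers import Real


def numeric_values(value):
    """Yield numbers from a scalar or a flat collection; skip everything else."""
    items = value if isinstance(value, (list, tuple)) else [value]
    for item in items:
        # bool is a subclass of int in Python; treat it as non-numeric here.
        if isinstance(item, Real) and not isinstance(item, bool):
            yield float(item)


def sum_strategy(values):
    """Sum every numeric value found across the aggregated events."""
    return sum((n for value in values for n in numeric_values(value)), 0.0)
```

Passing `[10, "n/a", None, [5, "x"]]` yields 15.0: the string and null values are ignored, and the collection contributes only its numeric member.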

Example (Scalar Values):

Consider three log events being aggregated:

// Event 1 Attributes

{

"bytes_sent": 1024

}

// Event 2 Attributes

{

"bytes_sent": 2048

}

// Event 3 Attributes

{

"bytes_sent": 512

}

Applying the Sum strategy to bytes_sent:

// Aggregated Attributes

{

"bytes_sent": 3584.0

}

Example (Mixed Scalars and Collections):

// Event 1 Attributes

{

"request_counts": [100, 50]

}

// Event 2 Attributes

{

"request_counts": 75

}

// Event 3 Attributes

{

"request_counts": [25, 30]

}

Applying the Sum strategy to request_counts:

// Aggregated Attributes

{

"request_counts": 280.0

}

Min strategy

The Min strategy keeps track of the minimum numeric attribute value across all aggregated log events. Non-numeric values are ignored, and the result is stored as a scalar double value. This strategy is useful for tracking metrics such as minimum response times, smallest payload sizes, or lowest error rates.

This strategy supports both scalar and collection inputs. For scalar numbers, values are compared directly. For collections, all numeric values are scanned to find the minimum value and then compared to the existing minimum.

Example (Scalar Values):

Consider three log events being aggregated:

// Event 1 Attributes

{

"response_time_ms": 150.5

}

// Event 2 Attributes

{

"response_time_ms": 75.2

}

// Event 3 Attributes

{

"response_time_ms": 200.0

}

Applying the Min strategy to response_time_ms:

// Aggregated Attributes

{

"response_time_ms": 75.2

}

Example (Mixed Scalars and Collections):

// Event 1 Attributes

{

"latencies": [100.5, 200.0]

}

// Event 2 Attributes

{

"latencies": 50.2

}

// Event 3 Attributes

{

"latencies": [75.0, 25.0]

}

Applying the Min strategy to latencies:

// Aggregated Attributes

{

"latencies": 25.0

}

Max strategy

The Max strategy keeps track of the maximum numeric attribute value across all aggregated log events. Non-numeric values are ignored, and the result is stored as a scalar double value. This strategy is useful for tracking maximum response times, largest payload sizes, or peak error rates.

This strategy supports both scalar and collection inputs. For collections, all numeric values are scanned to find the maximum value.

Example (Scalar Values):

Consider three log events being aggregated:

// Event 1 Attributes

{

"response_time_ms": 150.5

}

// Event 2 Attributes

{

"response_time_ms": 275.8

}

// Event 3 Attributes

{

"response_time_ms": 200.0

}

Applying the Max strategy to response_time_ms:

// Aggregated Attributes

{

"response_time_ms": 275.8

}

Example (Mixed Scalars and Collections):

// Event 1 Attributes

{

"latencies": [100.5, 200.0]

}

// Event 2 Attributes

{

"latencies": 250.8

}

// Event 3 Attributes

{

"latencies": [75.0, 300.0]

}

Applying the Max strategy to latencies:

// Aggregated Attributes

{

"latencies": 300.0

}

Average strategy

The Average strategy computes the average (mean) of numeric attribute values across all aggregated log events. Non-numeric values are ignored, and the result is stored as a scalar double value.

The strategy supports both scalar and collection inputs. Each scalar number is treated as a single observation, while collections are scanned for all numeric values, with each treated as a separate observation.
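The observation-counting rule matters for the result, so here is a small Python sketch of it. The helper names are hypothetical, collections are scanned one level deep, and returning None when there are no numeric observations is an assumption.

```python
from numbers import Real


def numeric_values(value):
    """Yield numbers from a scalar or a flat collection; skip everything else."""
    items = value if isinstance(value, (list, tuple)) else [value]
    for item in items:
        # bool is a subclass of int in Python; treat it as non-numeric here.
        if isinstance(item, Real) and not isinstance(item, bool):
            yield float(item)


def average_strategy(values):
    """Mean over every numeric observation, not over events."""
    observations = [n for value in values for n in numeric_values(value)]
    if not observations:
        return None
    return sum(observations) / len(observations)
```

Because each element of a collection counts as its own observation, `[[100.0, 200.0], 50.0, [150.0, 250.0]]` averages five numbers and yields 150.0. Averaging the per-event means instead (150, 50, and 200) would give about 133.3, which is not what the strategy describes.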

Example (Scalar Values):

Consider three log events being aggregated:

// Event 1 Attributes

{

"response_time_ms": 100.0

}

// Event 2 Attributes

{

"response_time_ms": 200.0

}

// Event 3 Attributes

{

"response_time_ms": 150.0

}

Applying the Average strategy to response_time_ms:

// Aggregated Attributes

{

"response_time_ms": 150.0

}

Example (Mixed Scalars and Collections):

// Event 1 Attributes

{

"response_times": [100.0, 200.0]

}

// Event 2 Attributes

{

"response_times": 50.0

}

// Event 3 Attributes

{

"response_times": [150.0, 250.0]

}

Applying the Average strategy to response_times:

// Aggregated Attributes

{

"response_times": 150.0

}

Note: The average is calculated as (100.0 + 200.0 + 50.0 + 150.0 + 250.0) / 5 = 150.0

Multiple strategies for a single attribute

Grepr allows you to apply multiple aggregation strategies to a single attribute path, creating multiple output attributes with different perspectives on the same data. This is particularly useful when you want to track multiple statistics for numeric attributes, such as computing both the sum and average of response times, or preserving individual values while also calculating their maximum.

When you configure multiple strategies for an attribute path:

- First strategy: Writes to both the original attribute name AND a suffixed version (e.g., bytes_sent and bytes_sent_sum).

- Additional strategies: Write only to suffixed versions (e.g., bytes_sent_avg, bytes_sent_max).

This design ensures backward compatibility with the original attribute name while providing additional aggregated perspectives.
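The naming rule can be sketched in Python as follows. The strategy keys (`"sum"`, `"avg"`, `"max"`, `"min"`) and the function name are hypothetical. The `_sum`, `_avg`, and `_max` suffixes come from the examples in this section, while `_min` is assumed by analogy. The sketch covers scalar numeric inputs only.

```python
# Suffix appended to the attribute path for each strategy.
SUFFIXES = {"sum": "_sum", "avg": "_avg", "max": "_max", "min": "_min"}

REDUCERS = {
    "sum": lambda xs: float(sum(xs)),
    "avg": lambda xs: sum(xs) / len(xs),
    "max": lambda xs: float(max(xs)),
    "min": lambda xs: float(min(xs)),
}


def apply_strategies(path, values, strategies):
    """Compute one output attribute per strategy, in configuration order.

    The first strategy also writes to the original attribute name.
    """
    output = {}
    for index, name in enumerate(strategies):
        result = REDUCERS[name](values)
        if index == 0:
            output[path] = result
        output[path + SUFFIXES[name]] = result
    return output
```

Calling `apply_strategies("bytes_sent", [1024, 2048, 512], ["sum", "avg", "max"])` produces four keys: bytes_sent and bytes_sent_sum with the total, plus bytes_sent_avg and bytes_sent_max. Reordering the list changes which result lands under the unsuffixed name.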

Example Configuration:

For the attribute path bytes_sent, you configure three strategies in this order:

- Sum

- Average

- Max

Input Events:

// Event 1 Attributes

{

"bytes_sent": 1024

}

// Event 2 Attributes

{

"bytes_sent": 2048

}

// Event 3 Attributes

{

"bytes_sent": 512

}

Aggregated Output:

// Aggregated Attributes

{

"bytes_sent": 3584.0, // `Sum` (first strategy, uses original name)

"bytes_sent_sum": 3584.0, // `Sum` (first strategy, also uses suffixed name)

"bytes_sent_avg": 1194.67, // `Average` (second strategy)

"bytes_sent_max": 2048.0 // `Max` (third strategy)

}

This multi-strategy approach is especially powerful for numeric attributes where you want comprehensive statistics, or when you need to preserve individual values while also computing aggregations.

Common Use Cases:

- HTTP metrics: Apply Sum, Average, Min, and Max to response_time_ms to get complete latency statistics.

- Data transfer: Use Sum for total bytes and Preserve All (with a limit) to see individual transfer sizes.

- Error rates: Combine Sum for total error count with Preserve All to see which specific error codes occurred.

- Performance monitoring: Track Average response time while preserving outlier values using Preserve All with a limit and the unique flag enabled.

Configuring attribute aggregation in the UI

The Grepr UI provides an intuitive interface for configuring attribute aggregation strategies:

Configuring specific attribute paths

To configure merge strategies for specific attribute paths:

- In the Reducer configuration, locate the Attribute Configuration section.

- Under “Attribute merging strategies”, click the plus (+) button to create a new configuration.

- Enter the attribute path using dot notation (e.g., response_time or http.status) or JSON array format (e.g., ["http", "status"]).

- Click “Add Strategy” to add merge strategies:

  - Select the strategy type from the dropdown.

  - For Sample and Preserve All, configure the limit and unique checkbox.

  - Numeric strategies (Sum, Min, Max, Average) require no additional configuration.

  - To add multiple strategies, click “Add Strategy” again and select a different type (duplicates are not allowed).

- The UI displays the output attribute names that will be created (original name and suffixed versions).

- Reorder strategies by dragging them with the handle icon. The order determines which strategy retains the original attribute name.

Setting the default strategy

- In the Reducer configuration, locate the Attribute Configuration section.

- Scroll to the “Default Strategy” subsection at the bottom.

- Click “Add Strategy” to configure one or more default strategies:

  - Exact Match: No additional configuration needed.

  - Sample or Preserve All: Configure the limit (number of values to keep) and unique checkbox (whether to keep only distinct values).

  - Sum, Min, Max, or Average: No additional configuration needed.

The default strategy applies to all leaf-valued attributes that don’t have a specific attribute path strategy configured.

Understanding the output

The UI displays which attributes will be created for each configuration:

- Single strategy: Shows the original attribute path (e.g., bytes_sent).

- Multiple strategies: Shows both the original path and suffixed paths (e.g., bytes_sent, bytes_sent_sum, bytes_sent_avg).

The first strategy in a multi-strategy configuration always writes to both the original attribute name and its suffixed version, ensuring backward compatibility while providing multiple aggregation perspectives.